Scraping the Database

At first glance, one would expect this database to be simple to scrape: search results are returned as a table, a form that is ideal for scraping. Unfortunately, scraping proved more difficult than anticipated. Before turning to the scraping itself, however, I will first describe my overall strategy for accessing all of the names in the database.

One non-trivial question is how to reach every name in the database. Submitting the search form with all fields left blank did not return all 4.5 million names. I therefore concluded that the best strategy for scraping all 4.5 million names would be to search country by country: searching for all victims within Poland (with no restrictions on names), for example, returns 3,533,220 names. To access all 4.5 million names, I could then simply iterate over every country with entries in the database.
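The country-by-country plan can be sketched as a loop that builds one search URL per country. This is a minimal sketch, assuming the query-string parameters (`language`, `s_lastName`, `s_firstName`, `s_place`) that appear in the database's search URLs, and using a short, hypothetical `COUNTRIES` list; the real list would have to be compiled from the database itself. It only produces the URLs. The results on each page still have to be read separately.

```python
from urllib.parse import urlencode

BASE_URL = "http://yvng.yadvashem.org/index.html"

# Hypothetical, incomplete list of countries; the real list would have to be
# compiled from the places of residence that appear in the database.
COUNTRIES = ["Poland", "Hungary", "Czechoslovakia"]


def build_search_url(place, first_name="", last_name=""):
    """Build a search URL from the query parameters seen in the site's URLs."""
    params = {
        "language": "en",
        "s_lastName": last_name,
        "s_firstName": first_name,
        "s_place": place,
    }
    return BASE_URL + "?" + urlencode(params)


def country_search_urls(countries):
    """Return one search URL per country, with no restriction on names."""
    return [build_search_url(country) for country in countries]


if __name__ == "__main__":
    for url in country_search_urls(COUNTRIES):
        print(url)
```

With `first_name="Moses"` and `place="Poland"`, `build_search_url` reproduces the training-set URL used for the "Moses" search.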

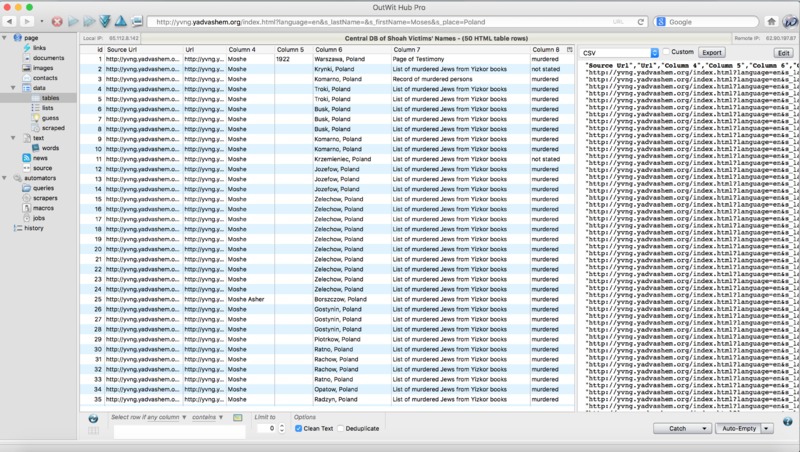

With this plan in mind, I settled on a small "training set" of names by searching for everyone with First Name "Moses" and Place of Residence "Poland". This returned a much more tractable 113,101 names, spread over 2,263 pages of 50 names each.

Here is the URL for this search: http://yvng.yadvashem.org/index.html?language=en&s_lastName=&s_firstName=Moses&s_place=Poland

At this point, I was ready to try scraping the names with OutWit Hub. I chose OutWit Hub over the Chrome Scraper extension because OutWit Hub offers more flexibility for iterating over multiple pages.

I began by trying OutWit Hub's built-in "tables" feature. In particular, I loaded the search-results page for this query in OutWit Hub.

In the "tables" tab of OutWit Hub's left-hand menu, the first page of search results has already been scraped automatically into a table that can be exported directly as a .csv file.
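Since this export covers a single page of results, scraping many pages this way would leave many per-page .csv files to combine. A minimal sketch of that step follows, using Python's standard `csv` module. It assumes every export shares the same header row (the actual columns depend on what OutWit Hub exports), and the function name is hypothetical.

```python
import csv


def merge_page_exports(page_files, merged_file):
    """Concatenate per-page CSV exports into one file.

    Assumes every export starts with the same header row; the header is
    written once and only data rows are copied from each file.
    Returns the number of data rows written.
    """
    header = None
    rows_written = 0
    with open(merged_file, "w", newline="", encoding="utf-8") as out:
        writer = csv.writer(out)
        for page_file in page_files:
            with open(page_file, newline="", encoding="utf-8") as f:
                reader = csv.reader(f)
                page_header = next(reader, None)
                if page_header is None:
                    continue  # skip empty exports
                if header is None:
                    header = page_header
                    writer.writerow(header)
                elif page_header != header:
                    raise ValueError(f"{page_file}: header differs from the first export")
                for row in reader:
                    writer.writerow(row)
                    rows_written += 1
    return rows_written
```

For example, `merge_page_exports(["page_1.csv", "page_2.csv"], "moses_poland.csv")` (hypothetical file names) would produce a single file with one header row. Raising on a mismatched header is a deliberate choice, so that a page exported with different settings is not silently mixed in.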

However, this is where complications began to emerge. Importantly, the URL for this specific search is: http://yvng.yadvashem.org/index.html?language=en&s_lastName=&s_firstName=Moses&s_place=Poland

Note that even though the query returned 2,263 pages of results, the URL does not contain the current page number. This is because the results table is loaded and updated dynamically by JavaScript: moving to another page changes the table's contents without changing the URL.
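One practical consequence is that the HTML the server sends, which is all a static parser ever sees, contains only an empty shell of the table. The sketch below illustrates this with a made-up snippet standing in for the page as served, assuming (as appears to be the case here) that the rows are inserted by a script after the page loads. It counts `<tr>` elements with Python's standard-library `html.parser`, which, like any static parser, never executes the script.

```python
from html.parser import HTMLParser

# Hypothetical stand-in for the HTML the server sends: the results table is an
# empty shell, and a script would fill in the rows once it runs in a browser.
STATIC_HTML = """
<html><body>
  <table id="results"><tbody></tbody></table>
  <script>/* rows are fetched and inserted here by JavaScript */</script>
</body></html>
"""


class RowCounter(HTMLParser):
    """Count <tr> elements in a document without executing any scripts."""

    def __init__(self):
        super().__init__()
        self.rows = 0

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows += 1


def count_rows(html):
    parser = RowCounter()
    parser.feed(html)
    parser.close()
    return parser.rows


if __name__ == "__main__":
    print(count_rows(STATIC_HTML))  # 0: the script never runs, so no rows exist
```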

This, of course, complicates scraping. Had the URL reflected the current page number, it would have been straightforward to configure an OutWit Hub macro to iterate over all such URLs and scrape each table. As things stand, the only clear workaround is to click through every page manually within OutWit Hub, letting it scrape each page in turn. That is infeasible for the roughly 90,000 pages in the full database that would have to be clicked through individually.
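The page counts quoted here follow from dividing the number of records by the 50 names shown per page and rounding up, since the last page may be only partly filled:

```python
import math

RESULTS_PER_PAGE = 50  # each results page lists 50 names


def pages_needed(n_records, per_page=RESULTS_PER_PAGE):
    """Number of result pages needed to show n_records (last page may be partial)."""
    return math.ceil(n_records / per_page)


if __name__ == "__main__":
    print(pages_needed(113_101))    # 2263: the "Moses" / Poland training set
    print(pages_needed(4_500_000))  # 90000: the full database of ~4.5 million names
```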

Gabe, Jeremy, and I have explored ways around this issue. With Nathaniel's help, we tried Beautiful Soup, a Python library for parsing HTML. After experimenting with it, however, we realized that Beautiful Soup does not execute JavaScript, so on its own it cannot access the dynamically generated contents of the table. Jeremy found dryscrape, another Python scraping library, which uses a headless WebKit browser that can run JavaScript. It seems promising: Jeremy was able to scrape the first page of the table with only a handful of lines of Python. One possible solution would be to select the "next page" button by its HTML element ID, which I know, and click it programmatically to advance through the pages.
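Selecting the "next page" button by its ID and clicking it repeatedly amounts to a simple loop: scrape the rows on the current page, click "next", and stop when there is no next page. The sketch below is written against a small hypothetical `Browser` interface rather than dryscrape's actual API, so that the loop can be shown (and tried with a fake browser) without a JavaScript-capable engine. `NEXT_BUTTON_ID` is a placeholder for the real ID, which is not given here; an adapter would have to map `table_rows` and `click_by_id` onto whatever the chosen library provides.

```python
from typing import List, Protocol

# Placeholder: the real ID of the "next page" button is not reproduced here.
NEXT_BUTTON_ID = "next-page"


class Browser(Protocol):
    """Hypothetical interface to a JavaScript-capable browser session."""

    def table_rows(self) -> List[List[str]]:
        """Return the rows of the results table currently displayed."""
        ...

    def click_by_id(self, element_id: str) -> bool:
        """Click the element with this ID; return False if there is none to click."""
        ...


def scrape_all_pages(browser: Browser, max_pages: int = 100_000) -> List[List[str]]:
    """Collect rows from every results page by repeatedly clicking "next"."""
    all_rows: List[List[str]] = []
    for _ in range(max_pages):  # safety cap in case "next" never disappears
        all_rows.extend(browser.table_rows())
        if not browser.click_by_id(NEXT_BUTTON_ID):
            break  # no further pages
    return all_rows


class FakeBrowser:
    """Stand-in that serves pre-built pages, for trying the loop offline."""

    def __init__(self, pages: List[List[List[str]]]):
        self.pages = pages
        self.current = 0

    def table_rows(self) -> List[List[str]]:
        return self.pages[self.current]

    def click_by_id(self, element_id: str) -> bool:
        if element_id != NEXT_BUTTON_ID or self.current + 1 >= len(self.pages):
            return False
        self.current += 1
        return True


if __name__ == "__main__":
    fake = FakeBrowser([[["Moses", "A"]], [["Moses", "B"]], [["Moses", "C"]]])
    print(len(scrape_all_pages(fake)))  # 3
```

With a real browser, `click_by_id` would also need to wait until the new page of results has actually been rendered before returning; otherwise the loop could read the same table twice.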

However, these efforts are on hold, because I have been corresponding by email with the director of the Yad Vashem Names Database about obtaining a subset of the dataset directly, without scraping the site. The director has kindly offered to provide information on approximately 5,000 names. I decided it would be best to select a subset of the dioceses (Bistümer) described in the biographies section: having these names would allow interesting cross-correlations between the Jewish populations and the clergy in those locations. I have requested the names from the following 10 cities, with the approximate number of names per city in parentheses:

- Aachen (~850)

- Augsburg (~600)

- Freiburg (~600)

- Fulda (~650)

- Limburg (~100)

- Meissen (< 50)

- Muenster (~400)

- Osnabrueck (~200)

- Paderborn (~300)

- Trier (~700)

This amounts to approximately 4,500 records. I will update this section as developments are made.
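As a quick check on that total, the approximate counts in the list can be summed. Treating Meissen's "< 50" as 50 gives an upper-end estimate of about 4,450 records, consistent with roughly 4,500 and within the approximately 5,000 names offered.

```python
# Approximate record counts requested per diocese, as listed above.
# Meissen is listed as "< 50"; 50 is used here as an upper bound.
REQUESTED = {
    "Aachen": 850,
    "Augsburg": 600,
    "Freiburg": 600,
    "Fulda": 650,
    "Limburg": 100,
    "Meissen": 50,
    "Muenster": 400,
    "Osnabrueck": 200,
    "Paderborn": 300,
    "Trier": 700,
}


def total_requested(counts):
    """Sum the approximate per-diocese counts."""
    return sum(counts.values())


if __name__ == "__main__":
    print(len(REQUESTED), total_requested(REQUESTED))  # 10 4450
```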